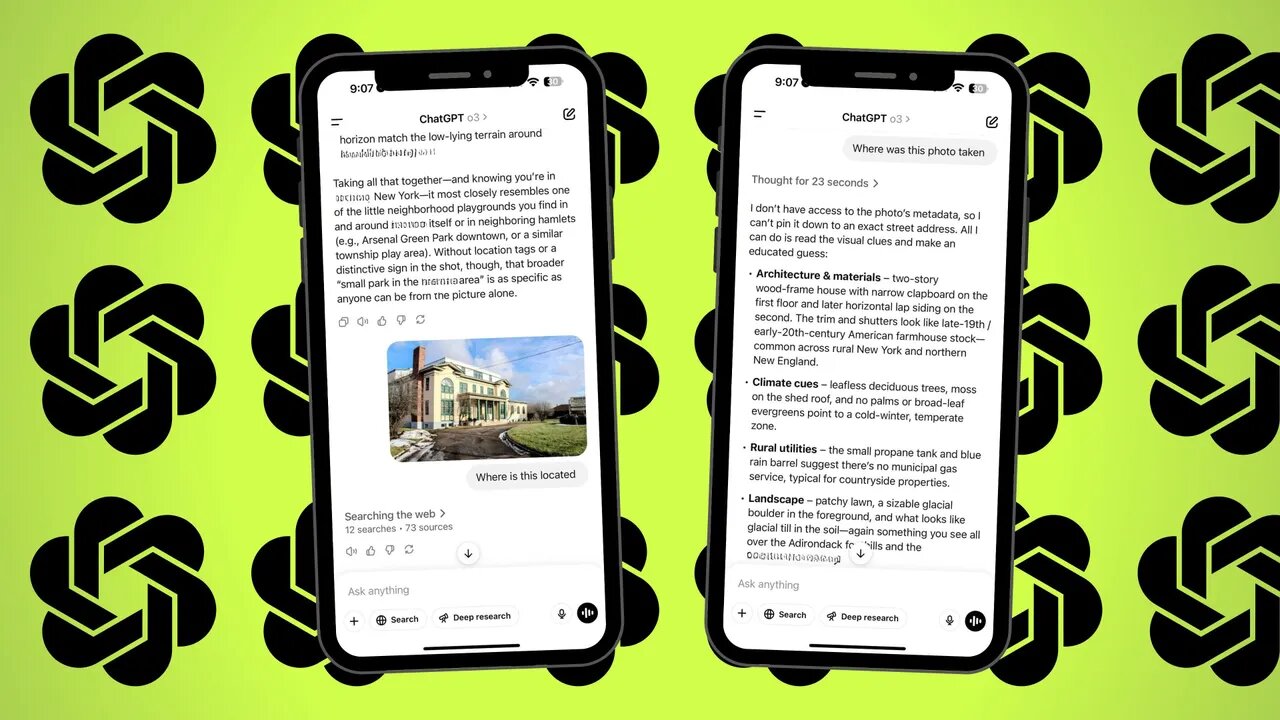

OpenAI has launched a new AI model, known as o3, that can determine where a photo was taken by analyzing visual context clues. Users are uploading pictures and asking ChatGPT to “geoguess” their location, with the model returning surprisingly accurate results along with detailed reasoning. For example, it identified a University of Melbourne library book from its spine label and located a residential scene in Suriname using subtle visual hints.

The model builds on capabilities that existed in earlier versions, like GPT-4o, but delivers higher accuracy. In a test with a photo from the 2025 New York Auto Show, GPT-4o guessed the setting was an auto show in one of several cities but misread the car’s name. The o3 model, however, correctly identified it as Subaru’s Trailseeker EV and linked it to the New York event, even referencing booth details like lighting and flooring.

Beyond identifying locations, the model can manipulate the images it receives, for example by rotating them or enhancing legibility, to improve its analysis. In one case, it rotated upside-down handwriting in a notebook to reveal a date and task. OpenAI says the model is still prone to visual mistakes and that misreading an image can affect its answers.

The company notes that the technology may support use cases like accessibility, research, and emergency services. However, concerns around privacy and misuse remain. Other apps, like GeoSpy, use similar techniques and have raised alarm over their potential for exploitation. OpenAI states that it trains its models to refuse requests for private information and actively monitors for policy violations.