Instagram is implementing new content filters designed to limit what teens can see on the platform. Teen Accounts “will see content similar to what they’d see in a PG-13 movie, by default,” Meta says. Posts containing strong language, risky stunts, or potentially harmful material, such as marijuana paraphernalia, will no longer be suggested. This expands upon existing restrictions that already block sexually suggestive, graphically disturbing, or disordered eating content.

Teens will also be restricted from following or interacting with accounts that post age-inappropriate content. If someone sends a teen such content via direct message, the links will be disabled. Search results are limited as well: teens can't reach accounts that post mature content, and searches are blocked for terms like “alcohol” and “gore,” as well as for topics such as suicide, self-harm, and eating disorders. These restrictions also prevent older users from finding teen accounts in search.

The PG-13 limits also extend to Meta AI for teen accounts. The chatbot will avoid giving responses deemed inappropriate for the age group, a change that follows earlier concerns about the AI engaging in sexual conversations with minors.

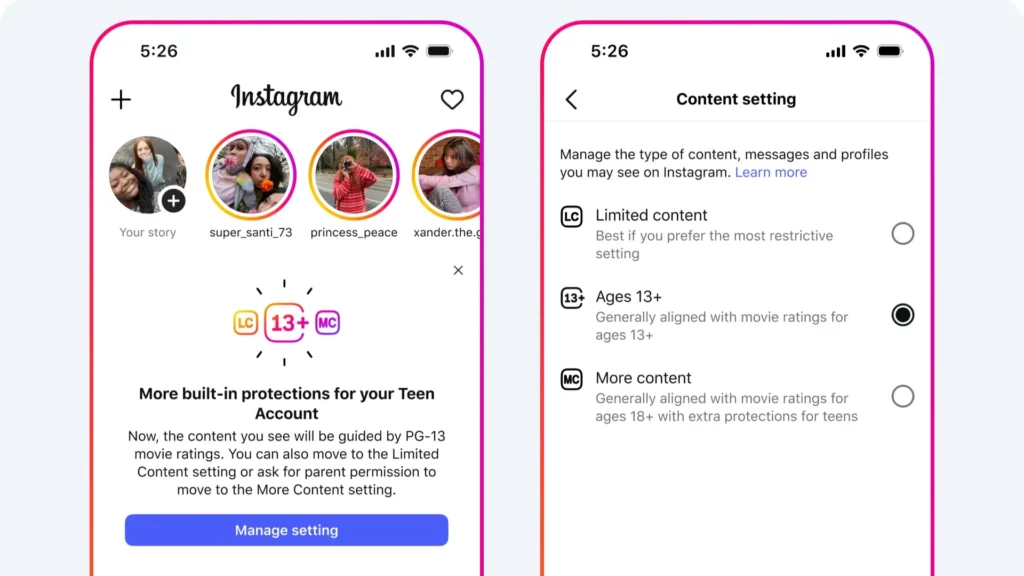

Teen Accounts, which rolled out last year and are automatically assigned to users under 18, come with default restrictions that cannot be changed without parental consent. For parents who want stricter controls, Instagram is introducing a Limited Content setting that further restricts what teens can view, post, or receive in comments. It can be enabled under Settings > Teen Account Settings > What You See > Content Settings. Limited Content restrictions for AI chats are expected next year.

Instagram is also testing tools for parental oversight, including an age-gating feature that marks content as suitable for viewers over 13, viewers over 18, or no one, and a tool that lets parents flag content they think should be hidden from teens.

The updates are launching today in the U.S., U.K., Australia, and Canada, with a global rollout planned by the end of the year.